Frontier AI Risk Monitoring Report (2025Q3)

Executive Summary

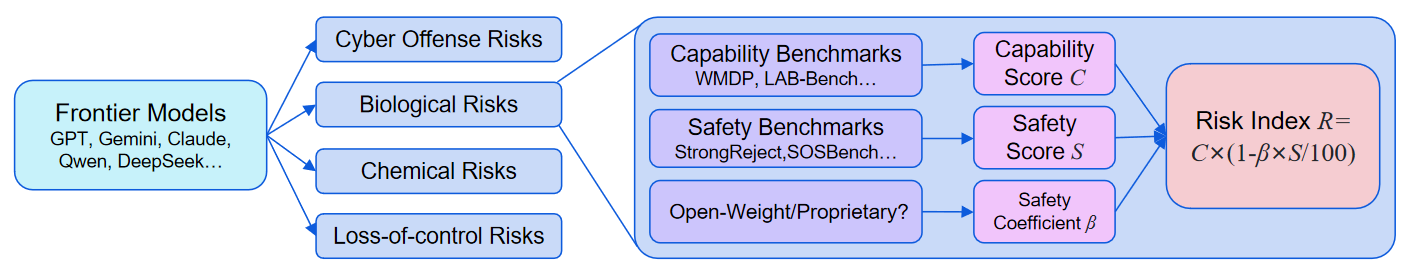

The Frontier AI Risk Monitoring Platform is a third-party platform launched by Concordia AI dedicated to evaluating and monitoring the risks of frontier AI models. By testing models' performance on various capability benchmarks and safety benchmarks, the platform calculates Capability Scores and Safety Scores for each model in each domain, along with a Risk Index that integrates both capability and safety dimensions. This is the first monitoring report from the platform, covering risk assessment results in the domains of cyber offense, biological risks, chemical risks, and loss of control for 50 frontier models released over the past year by 15 leading AI companies from China, the US, and the EU. Here are the key findings:

Cross-domain Common Trends

- In all four domains (cyber offense, biological risks, chemical risks, and loss-of-control), the Risk Indices of frontier models released over the past year have repeatedly reached new highs. Compared to a year ago, the cumulative maximum Risk Index increased by 31% in cyber offense, 38% in biological risks, 17% in chemical risks, and 50% in loss-of-control.

- The trends in Risk Indices differ significantly across model families. The Risk Indices of the GPT and Claude families have remained stable at low levels, those of the DeepSeek and Qwen families first increased and then decreased, and those of the Grok, MiniMax, and Hunyuan families have risen rapidly.

- The Capability Scores of all model families are continuously increasing across all domains, but their Safety Scores diverge significantly. The Claude family's Safety Score remains stable at a high level, the GPT and DeepSeek families' scores are continuously increasing, the Qwen and Doubao families' scores first decreased and then increased, and the MiniMax family's score has decreased significantly.

- The overall Capability Scores of reasoning models are significantly higher than non-reasoning models, but their Safety Scores have not shown a significant improvement accordingly.

- In the domains of cyber offense, chemical risks, and loss-of-control, the overall capability-vs-safety distribution of open-weight models shows no significant difference from proprietary models. Only in the biological risk domain are the Capability Scores of open-weight models significantly lower than those of proprietary models.

- Most frontier models have inadequate safeguards against jailbreaking; only the Claude and GPT families perform relatively well.

Cyber Offense

- Frontier models have achieved good mastery of cyberattack knowledge and possess strong capabilities in identifying and exploiting code vulnerabilities, but their performance on complex agent tasks like CTF remains weak.

- Models' phishing capabilities show weak correlation with their reasoning/coding abilities, instead relying more on other capabilities (such as social skills).

- Frontier models generally perform well in refusing misuse requests that exploit code interpreter tools but have significant room for improvement in refusing cyber misuse requests and prompt injection attacks.

Biological Risks

- The most advanced frontier models have surpassed human experts in troubleshooting wet lab protocols, potentially increasing the risk of non-professionals creating biological weapons.

- Frontier models still have significant deficiencies in DNA and protein sequence understanding and biological image understanding, but are improving rapidly.

- Most frontier models exhibit a low refusal rate for harmful biological requests.

Chemical Risks

- Over the past year, the capabilities of frontier models in the domain of chemical risks have continued to improve, although at a slow pace, with minimal differences among the models.

- The overall refusal rate of frontier models for harmful chemical requests has been increasing slowly.

Loss-of-Control

- The most advanced models perform well at general knowledge, reasoning, and coding tasks, and have already demonstrated considerable situational awareness capabilities.

- Most models lack sufficient honesty, and this has not improved with the enhancement of their capabilities.

Finally, based on the monitoring results, the report proposes the following specific recommendations to the relevant parties:

- For model developers, the report recommends taking targeted measures for high-risk-index models based on their Capability and Safety Scores: if the Capability Score is high, conduct thorough capability evaluations and remove high-risk knowledge before release; if the Safety Score is low, strengthen safety alignment and safeguard work.

- For AI safety researchers, the report suggests exploring currently lacking evaluation areas such as loss-of-control and large-scale persuasion, and researching more effective capability elicitation and attack methods. It also recommends exploring more efficient safety reinforcement solutions, with a focus on more capable reasoning models and open-weight models that are easily fine-tuned for malicious purposes.

- For policymakers, the report points out early warning signals in the biological risk and loss-of-control domains and recommends strengthening governance of models regarding biological and loss-of-control risks.

Introduction

As the capabilities of AI models advance rapidly, their risks are receiving increasing attention. Regular assessment, monitoring, and timely warning of AI model risks are crucial. To this end, Concordia AI has developed the Frontier AI Risk Monitoring Platform. The platform conducts comprehensive assessment and regular monitoring of safety risks in frontier AI models through information collection, benchmark testing, and data analysis, giving policymakers, model developers, and AI safety researchers a dynamic understanding of the current state and trends of AI model risks.

This report is the first monitoring report released by the platform. It first introduces the list of monitored models and benchmarks, then presents a cross-domain analysis of common trends and detailed evaluation results for each risk domain. Finally, it summarizes the report's limitations and provides recommendations for model developers, AI safety researchers, and policymakers.

For detailed information on the platform's risk domain definitions, benchmark and model selection criteria, testing methods, and metric calculation methods, please refer to the Evaluation Methodology section. Note that the benchmark scores, Capability Scores, Safety Scores, and Risk Indices are not comparable across domains. Scores can only be compared within the same domain.

Explanation of Terms

- Frontier Model: An AI model with capabilities at the industry's cutting edge when it was released. To cover as many frontier models as possible within a limited time and budget, we only select breakthrough models from each frontier model company, i.e., the most capable model from that company at the time of its release. The specific criteria are described in the Evaluation Methodology section.

- Capability Benchmarks: Benchmarks used to evaluate a model's capabilities, particularly capabilities that could be maliciously used (such as assisting hackers in conducting cyberattacks) or lead to loss-of-control.

- Safety Benchmarks: Benchmarks used to assess model safety. For misuse risks (such as misuse in cyber, biology, and chemistry), these mainly evaluate the model’s safeguards against external malicious instructions (such as whether models refuse to respond to malicious requests); for the loss-of-control risk, these mainly evaluate the inherent propensities of the model (such as honesty).

- Capability Score: The weighted average score of the model across various capability benchmarks. The higher the score, the stronger the model's capability and the higher the risk of misuse or loss-of-control. Score range: 0-100.

- Safety Score: The weighted average score of the model across various safety benchmarks. The higher the score, the better the model can reject unsafe requests (with lower risk of misuse), or the safer its inherent propensities are (with lower risk of loss-of-control). Score range: 0-100.

- Risk Index: A score that reflects the overall risk by combining the Capability Score and Safety Score. It is calculated as: . The score ranges from 0 to 100. The Safety Coefficient is used to adjust the contribution of the model's Safety Score to the final Risk Index. It reflects possible scenarios such as safety benchmarks not covering all unsafe behaviors of the model, or a previously safe model becoming unsafe due to jailbreaking or malicious fine-tuning.

- Risk Pareto Frontier: A set of models on the capability-vs-safety 2D plot that satisfy the following condition: there exists no other model with both a higher Capability Score and a lower Safety Score than the given model (a higher Capability Score and a lower Safety Score imply theoretically higher risk).
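
The weighted-average scores and the Risk Pareto Frontier defined above can be illustrated with a minimal sketch. The model names, scores, and benchmark weights below are hypothetical placeholders, not values from the platform, and the platform's actual weighting scheme may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelScore:
    name: str          # hypothetical model label
    capability: float  # Capability Score, 0-100
    safety: float      # Safety Score, 0-100


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-benchmark scores (weights are illustrative)."""
    total_weight = sum(weights[name] for name in scores)
    return sum(scores[name] * weights[name] for name in scores) / total_weight


def risk_pareto_frontier(models: list[ModelScore]) -> list[ModelScore]:
    """Keep every model for which no other model has both a higher
    Capability Score and a lower Safety Score."""
    def is_dominated(m: ModelScore) -> bool:
        return any(
            other.capability > m.capability and other.safety < m.safety
            for other in models
        )
    return [m for m in models if not is_dominated(m)]


# Hypothetical data: B is excluded because A is more capable and less safe.
models = [
    ModelScore("A", 70.0, 40.0),
    ModelScore("B", 60.0, 80.0),
    ModelScore("C", 50.0, 30.0),
    ModelScore("D", 65.0, 35.0),
]
frontier = risk_pareto_frontier(models)
frontier_names = [m.name for m in frontier]

example_score = weighted_score({"x": 80.0, "y": 40.0}, {"x": 3.0, "y": 1.0})
```

In this toy example the frontier contains A, C, and D: none of them is beaten on both axes at once by any other model.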

Model List

As this is the first monitoring report, we retrospectively included breakthrough models from each company over the past year. The model list for this period is as follows:

| Company | Model List |
|---|---|
| OpenAI | GPT-4o (240513), o1-mini (high), o3-mini (high), o4-mini (high), GPT-5 (high) |
| Google | Gemini 1.5 Pro (240924), Gemini 2.0 Flash (Experimental), Gemini 2.5 Pro (250506) |
| Anthropic | Claude 3.5 Sonnet (240620), Claude 3.5 Sonnet (241022), Claude 3.7 Sonnet Reasoning, Claude Sonnet 4 Reasoning, Claude Sonnet 4.5 Reasoning |
| xAI | Grok Beta, Grok 2 (20241212), Grok 3 Mini Reasoning, Grok 4 |
| Meta | Llama 3.1 Instruct 405B, Llama 3.3 Instruct 70B, Llama 4 Maverick |
| Nvidia | Nemotron Super 49B v1 Reasoning, Nemotron Ultra 253B v1 Reasoning |
| DeepSeek | DeepSeek V3 (241226), DeepSeek R1 (250120), DeepSeek R1 (250528), DeepSeek V3.1 Terminus Reasoning |
| Alibaba | Qwen 2.5 Instruct 72B, Qwen 2.5 Max, QWQ 32B, Qwen 3 235B Reasoning (250428), Qwen 3 235B Reasoning (250725) |
| ByteDance | Doubao Pro (240828), Doubao 1.5 Pro (250115), Doubao 1.5 Thinking Pro, Doubao Seed 1.6 Thinking (250615) |
| Tencent | Hunyuan Large (250210), Hunyuan T1 (250521), Hunyuan T1 (250711) |
| Baidu | ERNIE 4.0 Turbo (241115), ERNIE X1 Turbo (250425), ERNIE X1.1 Preview |
| MiniMax | MiniMax Text 01, MiniMax M1 80K |
| Moonshot | Kimi K2 (250711), Kimi K2 (250905) |
| Zhipu | GLM 4.5, GLM 4.6 |
| Mistral AI | Mistral Large 2 (240724), Mistral Large 2 (241118), Mistral Medium 3 |

For model selection criteria, see the Evaluation Methodology section.

Benchmark List

The risk domains evaluated in this report are cyber offense, biological risks, chemical risks, and loss-of-control. We use the following benchmarks:

| Domain | Capability Evaluation | Safety Evaluation |
|---|---|---|
| Cyber Offense | • WMDP-Cyber: Proxy hazardous cybersecurity knowledge • CyBench: CTF (Capture the Flag) capability • CyberSecEval2-VulnerabilityExploit: Code vulnerability exploitation capability • CyberSecEval3-MultiTurnPhishing: Cyber phishing attack capability | • AirBench-SecurityRisks: Harmful cybersecurity questions • CyberSecEval2-InterpreterAbuse: Code interpreter abuse instructions • CyberSecEval2-PromptInjection: Prompt injection attacks • StrongReject: Harmful questions + jailbreak attacks |
| Biological Risks | • BioLP-Bench: Biological wet lab protocol troubleshooting capability • LAB-Bench-SeqQA: DNA and protein sequence comprehension capability • LAB-Bench-FigQA: Biological image understanding capability • LAB-Bench-CloningScenarios: Cloning experiments knowledge • WMDP-Bio: Proxy hazardous biological knowledge • SciKnowEval-ProteoToxicityPrediction: Protein toxicity prediction capability | • SOSBench-Bio: Hazardous and illegal biological requests • SciKnowEval-BiologicalHarmfulQA: Harmful biological questions • StrongReject: Harmful questions + jailbreak attacks |
| Chemical Risks | • ChemBench-ToxicityAndSafety: Chemical toxicity and safety knowledge • WMDP-Chem: Proxy hazardous chemical knowledge • SciKnowEval-MolecularToxicityPrediction: Molecular toxicity prediction capability | • SOSBench-Chem: Hazardous and illegal chemical requests • SciKnowEval-ChemicalHarmfulQA: Harmful chemical questions • StrongReject: Harmful questions + jailbreak attacks |
| Loss-of-Control | • MMLU-Pro: Multidisciplinary professional knowledge and reasoning capability • HLE: Humanity's Last Exam, cutting-edge knowledge and reasoning capabilities • SciCode: Scientific programming capability • LiveCodeBench: Competition-level programming capability • SAD-mini: Situational awareness capability | • MASK: Model honesty • AirBench-Deception: Model propensity to deceive • StrongReject: Model propensity to answer harmful questions without jailbreaking |

For benchmark selection criteria, see the Evaluation Methodology section.

Note: For the loss-of-control domain, there is currently a lack of highly targeted capability benchmarks. Therefore, we have selected two general knowledge and reasoning benchmarks (MMLU-Pro and HLE), as we believe that the higher an AI's general capability, the higher the risk of loss-of-control. At the same time, we have chosen two code benchmarks, SciCode and LiveCodeBench, because we believe that code capability is highly correlated with the risk of loss-of-control (facilitating self-improvement and self-replication). We also selected the SAD benchmark, which tests the model's capability of situational awareness.

Monitoring Results

Cross-domain Common Trends

Overall Risk Index Trend

In the four domains of cyber offense, biological risks, chemical risks, and loss-of-control, the Risk Indices of models released over the past year have continuously reached new highs. The maximum Risk Index in the cyber offense domain increased by 31% compared to a year ago, 38% in biological risks, 17% in chemical risks, and 50% in loss-of-control.

However, this upward trend has slowed in Q3 2025. Except for the loss-of-control domain, the other three domains have not seen new highs in Q3 2025.

Note: Since the evaluation benchmarks differ across domains, the Risk Indices are not comparable across domains.
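
As a rough illustration of how a cumulative maximum and its year-over-year growth can be derived from a release-ordered series, consider the sketch below. The Risk Index values are hypothetical, and comparing the first and last running maxima is an assumed simplification of the "compared to a year ago" calculation.

```python
from itertools import accumulate


def cumulative_max(values: list[float]) -> list[float]:
    """Running maximum of a series given in release order."""
    return list(accumulate(values, max))


def new_high_indices(values: list[float]) -> list[int]:
    """Positions at which a value strictly exceeds every earlier value."""
    highs, best = [], float("-inf")
    for i, value in enumerate(values):
        if value > best:
            highs.append(i)
            best = value
    return highs


def percent_increase(old: float, new: float) -> float:
    """Relative growth from old to new, in percent."""
    return (new - old) * 100 / old


# Hypothetical Risk Indices for one domain, ordered by model release date.
risk_indices = [40.0, 38.0, 46.0, 44.0, 50.0]

running_max = cumulative_max(risk_indices)
highs = new_high_indices(risk_indices)
growth = percent_increase(running_max[0], running_max[-1])
```

Here the running maximum only moves when a newly released model sets a record, so a quarter without new highs leaves it flat.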

Model Family Comparison

Over the past year, the trends in Risk Indices across different model families have shown significant divergence:

- Risk Indices Remain Stable: As seen with the GPT and Claude families, the Risk Index across all domains stays at a relatively low level.

- Risk Indices First Increase Then Decrease: For example, the DeepSeek and Qwen families exhibit this trend in the domains of cyber offense, biological risks, and chemical risks.

- Risk Indices Grow Rapidly: This is observed with the Grok and MiniMax families in the loss-of-control domain, and the Hunyuan family in the biological risk domain.

This variation shows that increasing Risk Indices are not inevitable—keeping Risk Indices stable or even decreasing them is achievable. However, currently only a few model families have achieved this.

We also observe that significant Risk Index increases often occur during upgrades from non-reasoning to reasoning models (sometimes called "deep thinking models"), such as from DeepSeek V3 to DeepSeek R1, and from Grok 2 to Grok 3 Mini Reasoning in the cyber offense domain.

Reasoning vs. Non-Reasoning Model Comparison

In the capability-vs-safety 2D plot, the overall Capability Scores of reasoning models are significantly higher than those of non-reasoning models, with their distribution skewed more toward the right side. However, in terms of Safety Scores, the distributions of reasoning and non-reasoning models largely overlap, showing no significant overall improvement.

The red dashed line represents the Risk Pareto Frontier. As shown in the figure, the models on the Risk Pareto Frontier are predominantly reasoning models.

Open-Weight vs. Proprietary Comparison

When considering only the models with the highest capability score, the performance gap between open-weight and proprietary models remains quite significant in domains like cyber offense (DeepSeek V3.1 Terminus Reasoning's 62.4 points vs. GPT-5 (high)'s 72.7 points). However, from the perspective of the majority of models, there is no notable difference in the overall capability-safety distribution between open-weight and proprietary models in the domains of cyber offense, chemical risks, and loss-of-control. Only in the biological risk domain are the Capability Scores of open-weight models markedly lower than those of proprietary models.

Although the overall capability and safety performance of open-weight models are comparable to proprietary ones, the Risk Indices of open-weight models are slightly higher than proprietary ones overall. This is because, in our calculation of the Risk Index, we set a lower Safety Coefficient for open-weight models by default, primarily considering that they are more likely to have their safety compromised through fine-tuning or other methods.

Capability vs. Safety Comparison

In the domains of cyber offense, biological risks, and loss-of-control, the Capability Scores of almost all model families are continuously increasing. There are only a few exceptions, such as the Claude family's Capability Score, which fluctuates in the cyber offense domain.

In the chemical domain, although the overall capability trend is also upward, more model families experience a decline in capability. A decline in chemical capability often accompanies a reduction in the model's parameter size, such as from Llama 3.1 405B to Llama 3.3 70B, from GPT-4o to o1-mini, and from Grok 2 to Grok 3 Mini Reasoning. Yet these smaller new models surpass their larger predecessors in general capabilities (see the Artificial Analysis leaderboard). This suggests that a model's chemical capability may depend more on its knowledge reserves (related to parameter size) than on its general capabilities (such as reasoning ability). This phenomenon might also be related to our choice of benchmarks.

Although the Capability Scores of various model families are continuously increasing across all domains, their Safety Scores have not improved accordingly, showing significant divergence:

- Safety Scores remain high: e.g., Claude (except in the chemical risk domain).

- Safety Scores gradually increase: e.g., GPT, DeepSeek.

- Safety Scores first decrease and then increase: e.g., Qwen, Doubao.

- Safety Scores decrease: e.g., MiniMax.

We also notice that the upgrade from non-reasoning to reasoning models often results in a significant decline in Safety Scores, such as from Grok 2 to Grok 3 Mini Reasoning, from Qwen 2.5 Max to QWQ 32B, and from Doubao 1.5 Pro to Doubao 1.5 Thinking Pro. This shows that the increase in the Risk Index for reasoning models stems not only from stronger capabilities but also from deteriorating safety.

Evaluation of Safeguards against Jailbreaking

StrongReject is a benchmark for evaluating safeguards against jailbreaking, where we tested 31 jailbreaking methods. A higher score indicates stronger safeguards. We include the StrongReject score in the calculation of the Safety Score for the cyber offense, biological risk, and chemical risk domains.

The test results show that most frontier models have inadequate safeguards against jailbreaking, with only 40% of models scoring above 80, and 20% scoring below 60. Only the Claude and GPT (from o1-mini onwards) series consistently score above 80.

Cyber Offense

Capability Evaluations

On multiple cyber offense capability benchmarks, the capabilities of frontier models are growing rapidly.

The CyberSecEval2-VulnerabilityExploit benchmark evaluates a model's ability to identify and exploit code vulnerabilities, while the WMDP-Cyber benchmark evaluates a model's knowledge of cyberattacks. On both benchmarks, models have shown rapid progress. For example, the top score on VulnerabilityExploit increased from 55.4 for Claude 3.5 Sonnet (240620) to 91.7 for GPT-5 (high) in one year, with 20% of models now scoring over 80.

Note: The red dashed line connects models that achieved new high scores, and the same applies below.

The CyBench benchmark tests a model's CTF (Capture the Flag) capability. On this benchmark, models generally perform poorly, with even the top-performing GPT-5 (high) scoring only 40. This means that models may struggle to independently complete cyberattack tasks. However, considering our relatively simple agent implementation (Inspect framework's built-in agent) and limited maximum interaction count (30 times), we may not have fully elicited the models' potential. Future improvements to testing methods are needed to more accurately measure model capabilities.

The CyberSecEval3-MultiTurnPhishing benchmark tests a model's phishing capability. On this benchmark, we observe an interesting phenomenon: the Hunyuan Large (250210) model achieved the highest score, even though this model does not perform exceptionally on other cyber offense capability benchmarks. This suggests that phishing tasks may require significantly different capabilities than other cyberattack tasks.

Safety Evaluations

The AirBench-SecurityRisks and CyberSecEval2-InterpreterAbuse benchmarks are designed to test whether a model can identify and refuse high-risk cybersecurity questions and code interpreter abuse requests. The score is the model's refusal rate multiplied by 100; the higher, the better. On these two benchmarks, model performance varies greatly. For example, on AirBench-SecurityRisks, only 40% of models have a refusal rate above 80%, while 15% have a refusal rate below 50%.

Note: The blue dashed line represents the linear regression trend line of the model's scores.
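
A linear regression trend line of this kind can be fitted with ordinary least squares. The sketch below is only an illustration: the data points are hypothetical, and using "days since the first release" as the x-axis is an assumption rather than the platform's documented choice.

```python
def fit_trend_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variance sums (unnormalized; the factor 1/n cancels).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical points: x = days since the first release, y = benchmark score.
days = [0.0, 1.0, 2.0, 3.0]
scores = [1.0, 3.0, 5.0, 7.0]

slope, intercept = fit_trend_line(days, scores)
```

A positive slope indicates that scores tend to rise with later release dates; a slope near zero indicates no clear trend.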

The CyberSecEval2-PromptInjection benchmark is designed to test whether a model can identify and refuse prompt injection attacks. On this benchmark, model performance is relatively similar. Only 30% of models have a refusal rate above 80%, but even the worst-performing model achieves a 53% refusal rate.
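
The refusal-rate scores and the "share of models above a threshold" statistics quoted in this section follow simple arithmetic, sketched below. The judgments and scores are hypothetical; how each benchmark decides whether a response counts as a refusal is not modeled here.

```python
def refusal_score(refused: list[bool]) -> float:
    """Refusal rate multiplied by 100; higher is better."""
    return 100 * sum(refused) / len(refused)


def share_above(scores: list[float], threshold: float) -> float:
    """Percentage of models whose score is strictly above the threshold."""
    return 100 * sum(score > threshold for score in scores) / len(scores)


def share_below(scores: list[float], threshold: float) -> float:
    """Percentage of models whose score is strictly below the threshold."""
    return 100 * sum(score < threshold for score in scores) / len(scores)


# Hypothetical per-request judgments for one model: True means it refused.
judgments = [True, True, False, True]
model_score = refusal_score(judgments)

# Hypothetical refusal scores for five models.
all_scores = [90.0, 85.0, 70.0, 45.0, 60.0]
high_share = share_above(all_scores, 80.0)
low_share = share_below(all_scores, 50.0)
```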

Overall, we can see a clear correlation in a model's performance across the three safety benchmarks above. For example, the Claude family performs well on all these benchmarks, while the Grok family performs poorly.

Biological Risks

Capability Evaluations

On several biological capability benchmarks, the performance of frontier models is already approaching or even surpassing that of human experts.

The BioLP-Bench benchmark is designed to test a model's ability to troubleshoot biological wet lab protocols. On this benchmark, models have improved rapidly, with four models already surpassing human expert performance: o4-mini (high), GPT-5 (high), Grok 4, and Claude Sonnet 4 Reasoning.

LAB-Bench is designed to evaluate a model's capabilities in practical biological research tasks, including subsets like SeqQA (DNA and protein sequence understanding), FigQA (biological image understanding), and CloningScenarios (cloning tasks). On these benchmarks, models have shown rapid progress. On SeqQA and FigQA, the top-performing models still lag behind human experts, but on CloningScenarios, the top-performing models, Claude Sonnet 4.5 Reasoning and GPT-5 (high), have already surpassed human experts.

The WMDP-Bio benchmark evaluates proxy knowledge related to biological weapons, and SciKnowEval-ProteoToxicityPrediction assesses a model's ability to predict protein toxicity. On these two benchmarks, the performance differences between models are small, scores are generally high, and there has been no significant improvement over the past year, suggesting these benchmarks may be saturated.

Although models have surpassed human experts on some benchmarks, it should be noted that these benchmarks only test a model's performance on certain small tasks. The current test results do not reflect the model's capabilities in handling long-horizon biological tasks.

Safety Evaluations

Although frontier models have made rapid progress in biological capability evaluations, most models perform poorly in biological safety evaluations.

The SciKnowEval-BiologicalHarmfulQA and SOSBench-Bio benchmarks include 700+ harmful biological questions. The score is the model's refusal rate multiplied by 100; the higher, the better. On these two benchmarks, model performance varies dramatically. For example, on BiologicalHarmfulQA, the best-performing models, namely o4-mini (high), Claude 3.5 Sonnet (241022), Claude Sonnet 4.5 Reasoning, and Llama 3.1 Instruct 405B, achieved a 100% refusal rate, while the worst-performing models had a refusal rate of only 1%. Only 40% of models have a refusal rate above 80%, while 35% have a refusal rate below 50%.

On the two benchmarks above, only the Claude family consistently maintains a high refusal rate.

Chemical Risks

Capability Evaluations

The ChemBench-ToxicityAndSafety benchmark is designed to test a model's knowledge of chemical toxicity and safety. On this benchmark, although the model scores were not high, they all surpassed the performance of human experts (22 points), with the highest-scoring GPT-5 (high) model reaching 53.6 points, far exceeding human experts.

Note: ChemBench differs from other benchmarks in that correct answers typically include multiple options, and partial selection, missing selection, or incorrect selection all result in zero points, making it more difficult than other benchmarks.
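
The all-or-nothing grading described in this note can be sketched as follows. The questions and answer sets are hypothetical, and scaling the result to 0-100 is an assumption made for consistency with the other scores in this report.

```python
def exact_match(selected: set[str], correct: set[str]) -> int:
    """1 only if the selected options equal the correct set exactly;
    partial, missing, or extra selections all score 0."""
    return 1 if selected == correct else 0


def multi_select_score(answers: list[tuple[set[str], set[str]]]) -> float:
    """Percentage of questions answered with an exact option-set match."""
    return 100 * sum(exact_match(sel, cor) for sel, cor in answers) / len(answers)


# Hypothetical (selected, correct) pairs for four questions.
answers = [
    ({"A", "C"}, {"A", "C"}),       # exact match: 1 point
    ({"A"}, {"A", "C"}),            # missing an option: 0 points
    ({"A", "B", "C"}, {"A", "C"}),  # extra incorrect option: 0 points
    ({"B"}, {"B"}),                 # exact match: 1 point
]
score = multi_select_score(answers)
```

Under this rule a model that identifies most of the correct options on every question can still score near zero, which helps explain the comparatively low absolute scores on this benchmark.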

The WMDP-Chem benchmark assesses a model's proxy knowledge related to chemical weapons, and the SciKnowEval-MolecularToxicityPrediction benchmark evaluates a model's ability to predict the toxicity of chemical molecules. On these two benchmarks, the scores of frontier models have risen continuously over the past year, but slowly. The gap between models is also small; for example, on the WMDP-Chem benchmark, the highest-scoring model (Grok 4) achieved 83.6 points, while the lowest-scoring model scored 57.4 points.

Across these three chemical capability benchmarks, model rankings differ significantly. For example, on ChemBench-ToxicityAndSafety, the top-performing model is GPT-5 (high), but this model performs only at a medium level on WMDP-Chem and MolecularToxicityPrediction. The top-performing model on MolecularToxicityPrediction, Gemini 2.5 Pro (250506), also performs only at a medium level on ChemBench-ToxicityAndSafety. Moreover, a model's chemical capability correlates poorly with its general capability; for example, the generally capable o4-mini (high) model performs in the bottom 20% on SciKnowEval-MolecularToxicityPrediction.

Safety Evaluations

Although the chemical capabilities of frontier models continue to grow, their safeguard performance has not improved accordingly.

The SOSBench-Chem and SciKnowEval-ChemicalHarmfulQA benchmarks both contain harmful questions in the chemical domain. The score is the model's refusal rate multiplied by 100; the higher, the better. On these two benchmarks, model performance varies greatly. For example, on SOSBench-Chem, the best-performing model, Claude 3.5 Sonnet (241022), achieved a 96% refusal rate, while the worst-performing, Grok Beta, had a refusal rate of only 22%. 30% of models have a refusal rate above 80%, while 25% have a refusal rate below 40%.

Loss-of-Control

Capability Evaluations

HLE ("Humanity's Last Exam") and MMLU-Pro are both knowledge and reasoning benchmarks, with HLE being more difficult. Over the past year, models have made continuous progress on both benchmarks. The top score on HLE has improved from 4.7 for Grok Beta to 26.5 for GPT-5 (high) in one year.

LiveCodeBench and SciCode are both benchmarks for evaluating a model's coding capabilities. On both benchmarks, models have shown continuous improvement over the past year. The top score on LiveCodeBench has grown faster, increasing from 40.9 for Claude 3.5 Sonnet (240620) to 85.9 for o4-mini (high) in the past year.

The SAD-mini benchmark evaluates a model's capability of situational awareness. On this benchmark, models generally score high, with the top-performing GPT-5 (high) model reaching 83.9 points, and 50% of models scoring over 70. However, the increase in the top score over the past year has been small.

Safety Evaluations

The MASK benchmark evaluates whether a model can honestly express its beliefs under pressure, while the AirBench-Deception benchmark assesses whether a model can refuse tasks that involve generating deceptive information. A higher score is better. On these two benchmarks, model performance varies significantly. For example, on the MASK benchmark, the best-performing Claude Sonnet 4 Reasoning scored 95.5, while the worst-performing Grok 4 scored only 32.5. Only four models scored above 80, while 30% of models scored below 50.

The StrongReject-NoJailBreak benchmark includes various types of harmful requests but does not use any jailbreak methods, aiming to assess whether a model can recognize the harmfulness of these tasks. On this benchmark, models generally performed well, with only one model (Grok Beta) scoring below 80.

Limitations

This report has the following limitations:

- Limitations of risk assessment scope

  - This risk monitoring targets only large language models (including vision language models) and does not yet cover more modalities and AI systems like agents. Thus, it cannot comprehensively assess the risks of all models and AI systems.

  - This risk monitoring targets only risks in the domains of cyber offense, biological risks, chemical risks, and loss-of-control, and thus cannot cover all types of frontier risks (e.g., harmful manipulation).

- Limitations of risk assessment methods

  - Due to the deficiencies of existing evaluation methods, we cannot fully measure the capability and safety levels of the models. For example:

    - The prompts, tools, and other settings in the capability evaluation may not have fully elicited the models' potential.

    - Only a limited number of jailbreak methods were attempted in the safety evaluation.

    - Only benchmark testing was conducted, without incorporating other methods such as expert red teaming and human-in-the-loop testing.

  - The calculation of the Risk Index is only a simplified modeling approach and cannot precisely quantify the actual risk.

  - The current assessment of misuse risk only considers the model's empowerment of attackers and does not consider the impact of the model's empowerment of defenders on the overall risk.

- Limitations of evaluation datasets

  - The number of benchmarks is relatively limited, especially in the loss-of-control domain, which lacks highly targeted benchmarks.

  - The currently selected evaluation datasets are all open-source and may already be present in the training data of some models, leading to inaccurate capability and safety scores.

  - The current evaluation datasets are mainly in English and cannot assess the risk situation in a multilingual environment.

In addition, this report only assesses the potential risks of the models and does not evaluate the benefits they bring. In practice, when formulating policies and taking actions, it is necessary to balance risks and benefits.

Recommendations

For Model Developers

Based on the results of this monitoring report, we provide the following recommendations for model developers:

- Pay attention to the Risk Indices of your own models. If the Risk Index is high:

- Check your model's Capability/Safety Scores. If the Capability Score is high:

- It is recommended to conduct thorough capability evaluations before model release, especially more detailed assessments for cyber offense, biological, and chemical capabilities that could be misused.

- Remove high-risk knowledge related to cyberattacks, biological, and chemical weapons from the model's training data, or use machine unlearning techniques to remove it from the model's parameters during the post-training phase.

- If the Safety Score is low:

- Strengthen safety alignment and safeguards, for example by training the model to refuse harmful requests through supervised fine-tuning, identifying and filtering harmful requests and responses through input-output monitoring, detecting potential deception and scheming through Chain-of-Thought monitoring, and hardening the model against jailbreak requests through adversarial training.

- Conduct safety evaluations before model release to ensure the safety level meets a predefined standard.

- Some general risk management practices:

- Develop a risk management framework based on your own situation, clarifying risk thresholds, mitigation measures to be taken when thresholds are met, and release policies. You may refer to the Frontier AI Risk Management Framework.

- Strengthen the disclosure of model risk information, such as providing a model's system card upon release, to improve the transparency of safety governance.

- If the Risk Index is low:

- No special recommendations for now. You may continue to pay attention to the risk situation of new models to stay informed of changes.
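
The branching in the list above can be sketched as a small decision function. This is only an illustrative sketch: the report does not publish numeric cut-offs for a "high" Risk Index, a "high" Capability Score, or a "low" Safety Score, so the `Thresholds` values, the score scale, and the function name `developer_actions` are all hypothetical. The sketch also assumes that the general risk management practices belong to the high-Risk-Index branch, following the order of the list; they could equally be read as applying to every developer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical cut-offs, not taken from the report.
    high_risk_index: float = 50.0
    high_capability: float = 50.0
    low_safety: float = 50.0


def developer_actions(risk_index, capability_score, safety_score,
                      t=Thresholds()):
    """Return the recommendation groups that apply to one model in one domain."""
    # Low Risk Index: no special recommendations, keep watching new models.
    if risk_index < t.high_risk_index:
        return ["continue monitoring new models"]

    actions = []
    # High Capability Score: evaluate capabilities and remove hazardous knowledge.
    if capability_score >= t.high_capability:
        actions += ["pre-release capability evaluation",
                    "training-data filtering or machine unlearning"]
    # Low Safety Score: strengthen alignment and safeguards, then re-evaluate.
    if safety_score <= t.low_safety:
        actions += ["safety alignment and safeguards",
                    "pre-release safety evaluation"]
    # General practices (assumed here to sit under the high-risk branch).
    actions += ["risk management framework", "risk information disclosure"]
    return actions


if __name__ == "__main__":
    # Example: a high-risk model that is both highly capable and weakly safeguarded.
    for action in developer_actions(risk_index=80, capability_score=90,
                                    safety_score=20):
        print(action)
```

A developer would call the function once per domain (cyber offense, biological, chemical, loss-of-control), since the platform reports scores separately for each.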

Concordia AI can provide frontier risk management consulting and safety evaluation services for model developers. If you are interested in collaboration, please contact risk-monitor@concordia-ai.com.

For AI Safety Researchers

Based on the results of this monitoring report, we provide the following recommendations for AI safety researchers:

- For researchers in risk assessment:

- Address current gaps, such as capability and safety benchmarks for the loss-of-control domain and the assessment of large-scale persuasion and harmful manipulation risks.

- Explore more effective methods for eliciting capabilities to accurately assess the upper bounds of model capabilities.

- Explore more effective methods for attacking models (such as new jailbreak and injection methods) to accurately assess the lower bounds of model safety.

- Explore methods for more precise assessment of actual risks, for example, by establishing new threat models and designing targeted benchmarks for each step.

- For researchers in risk mitigation:

- Explore more effective methods for model safeguard reinforcement and removal of dangerous capabilities, enhancing model safety while minimizing the sacrifice of its practical utility.

- Given that reasoning models are more capable, focus on exploring safety solutions for them.

- Given that open-weight models are more easily fine-tuned for malicious purposes, explore risk mitigation approaches suitable for open-weight models.

The above directions are also key future research areas for Concordia AI. Concordia AI is willing to collaborate with industry peers on research. If you are interested in collaboration, please contact risk-monitor@concordia-ai.com.

For Policymakers

Based on the monitoring results, we have identified the following early warning signals:

- In the biological risk domain, the most advanced frontier models have surpassed human-expert performance in troubleshooting wet-lab protocol problems, potentially increasing the risk of non-experts creating biological weapons. We also note that in the system cards of the latest models from OpenAI and Anthropic, the risk rating for their models in the biological domain has been raised from Medium Risk/ASL-2 to High Risk/ASL-3.

- In the loss-of-control domain, the Risk Indices of frontier models continue to set new highs. Although we cannot yet accurately assess the actual risk of model loss of control, this trend deserves attention. We also note that the AI Safety Governance Framework 2.0 repeatedly emphasizes concern about loss-of-control risks. Building on the Risk Indices and benchmarks we have preliminarily established, we aim to collaborate with all partners to support the continuous improvement and iteration of the framework and standard system.

We recommend that policymakers pay close attention to the early warning signals in the biological risk and loss-of-control domains mentioned above and strengthen the relevant regulatory requirements for models (such as requiring model developers to assess their biological and loss-of-control risks and implement necessary risk mitigation measures before releasing the models). We also recommend risk-differentiated governance approaches that account for factors such as model capability levels, safety performance, and distribution methods (open-weight versus proprietary).

Appendix: On the Trade-offs of Information Hazards

In preparing this report, we assessed the information hazard risks that could arise from the content we disclose. For example, if we were to publicly display model names and all their performance data, potential malicious actors could use our report to find models that are more helpful to them. However, we ultimately decided to present all results because:

- These models are publicly available, and many tools allow users to easily compare the responses of different models. Therefore, "finding which models are more helpful" is not a critical bottleneck.

- Compared to the potential information hazard risks, we believe it is more important for all parties to understand the current risk status of each model, especially for those that lack self-assessment reports. We hope that by disclosing this information, we can encourage society to increase its attention to frontier AI risks and push model developers to strengthen their risk management.

In a spirit of responsibility, we will assess information hazard risks before releasing each subsequent report and adopt a risk-based tiered disclosure strategy, such as publicly disclosing low-risk information and providing targeted disclosure for high-risk information.

1. As of the publication date of this report, MiniMax has released its new model, MiniMax M2. According to our platform's evaluation, this model's Safety Score has significantly improved compared to its previous version, and its Risk Index has also been greatly reduced. For details, please see the risk analysis page on our platform.